Decoding the US Regulatory Landscape for AI Adoption in Banking

Contents

- Regulations on AI Adoption in Banking: What We Know

- Regulatory Direction: A Comparison with the EU AI Act

- Future Outlook

- Conclusion

Artificial intelligence (AI) has been used in banking for decades. AI-assisted decision-making, machine learning-led automation, and chatbots have all been around for years and have found their place in making banking more efficient and scalable.

What has changed is the sophistication of our computing power and, of course, the arrival of generative AI (Gen AI).

In 2023, the US Senate held a series of AI-focused educational briefings, including a classified all-senators briefing, along with nine AI Insight Forums. In December 2023, the Financial Stability Oversight Council (FSOC) highlighted AI as an emerging risk, underscoring how seriously regulators are treating this technology.

Congress views AI as a national security risk, yet the banking regulators have not introduced any AI-specific regulations. What is clear is a focus on establishing frameworks for how AI systems should be designed and what their security guardrails should look like. These frameworks should be built into every financial institution’s AI adoption strategy.

There are also common themes emerging around consumer protection and data privacy that can inform best practices for AI-powered products and services. In this blog, I highlight what financial institutions can adopt from the available frameworks and guidance, and offer some insights on how the American approach to AI regulation compares directionally with Europe’s.

Regulations on AI Adoption in Banking: What We Know

As AI use cases advance from simpler to more complex tasks, bankers and banking regulators are increasingly concerned about business risks. A 2024 survey of 127 American banking professionals found that 80% were concerned about the potential for bias in AI models, and by extension in decision-making; 77% about the erosion of client trust and transparency; and 73% about exposing customer data or creating a point of vulnerability for cyberattacks [2].

Similar concerns show up in the regulations being discussed in current legislative sessions. Congress has already introduced over 40 AI-related bills in 2024, focusing primarily on common themes to ensure AI is used responsibly and safely across the country. The following themes are relevant to the financial industry, along with the corresponding regulatory developments in the US:

Privacy and data protection:

Banks and credit unions hold a treasure trove of personal data, and there is a growing push to ensure that this data is adequately protected. The latest congressional efforts are laying the groundwork for what could finally become a national privacy law in the US, a development that has been brewing for years.

The Biden administration further emphasized this need when it unveiled the Blueprint for an AI Bill of Rights in October 2022, a non-binding guide to safeguarding privacy and civil rights while ensuring AI tools are fair and accurate. It followed up with an executive order on safe, secure, and trustworthy AI in October 2023.

Transparency and effectiveness:

For banks and credit unions, the most immediate concern comes from the Consumer Financial Protection Bureau (CFPB). Its recent focus on chatbots highlights the potential pitfalls of AI in customer service, including deceptive practices and customer frustration. Financial institutions deploying AI-powered chatbots should therefore be particularly vigilant about transparency and effectiveness.

Accountability:

Holding AI systems accountable is a top priority, especially in sensitive areas like credit scoring. The goal is to protect against bias and discrimination and to ensure that AI decisions are fair, transparent, and equitable. In practice, financial institutions must weigh a broad range of regulatory implications when implementing AI:

- Privacy laws like GDPR and the California Consumer Privacy Act

- Fair lending considerations under the Equal Credit Opportunity Act, and consumer reporting obligations under the Fair Credit Reporting Act

- Disclosure requirements under the Truth in Lending Act

- Comprehensive risk management and assessment protocols

Third-party risk assessment:

Perhaps most critically, regulators are emphasizing third-party risk management. As banks increasingly rely on external AI providers, robust oversight and risk mitigation strategies for these partnerships are essential.

Regulatory Direction: A Comparison with the EU AI Act

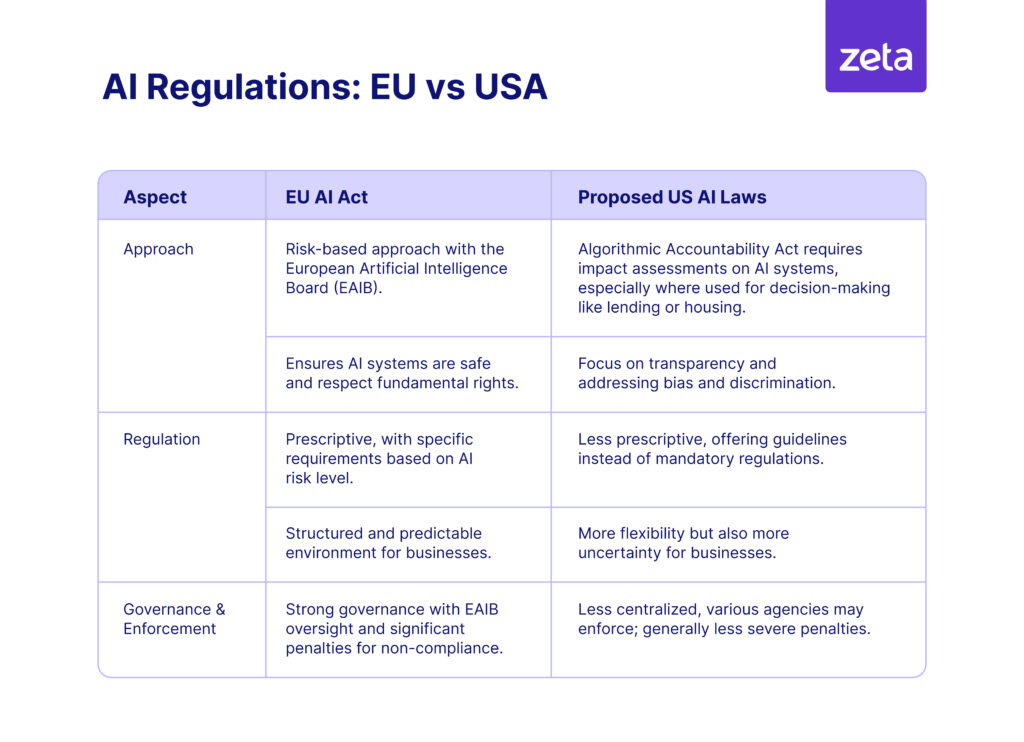

The European Union has been setting the pace globally with its AI Act, widely regarded as the most comprehensive AI framework today. The Act categorizes AI applications by risk level and imposes strict transparency and accountability requirements on high-risk systems. It is not specific to banking, but it classifies AI systems used to evaluate the creditworthiness or establish the credit score of individuals as high-risk, which brings many banking use cases into scope. The AI Act is expected to have the greatest global impact, given the size of the European market and its reach into other parts of the world.

Directionally, the current US approach differs from the one taken by the EU, as shown in Image 1:

Image 1

Globally, the challenge for every region will be keeping up with rapidly evolving technology and harmonizing risk management across borders.

Future Outlook

Looking ahead, several trends are likely to shape the future of AI regulation for banking in the US:

- Harmonization of standards: There is likely to be a push to harmonize AI regulations across borders, making it easier for banks to operate globally. Organizations like the Global Partnership on Artificial Intelligence (GPAI) could lead the charge here [5], creating a unified approach to AI oversight.

- Explainability and transparency: Future regulations are expected to emphasize explainable AI. In banking, this means AI systems will need to make clear how they reach their decisions, for example why a credit application was declined.

- Ethical AI frameworks: There will be a stronger emphasis on using AI ethically in banking, through frameworks that prevent bias, ensure non-discrimination and fairness, protect human rights, and align AI systems with societal values and norms [6].

Conclusion

AI is rapidly transforming the banking industry, and regulatory frameworks need to evolve to keep pace. By staying ahead of these trends, banks and other stakeholders can navigate the complexities of AI regulation and unlock AI’s full potential. The key to success lies in balancing robust compliance with a spirit of innovation.

References:

- [1] Tech Policy Press, US Senate AI Working Group Releases Policy Roadmap | May 2024

- [2] American Banker, Preparing for banking’s AI revolution | March 2024

- [3] NCSL, Summary: Artificial Intelligence 2024 Legislation | June 2024

- [4] The White House, Blueprint for an AI Bill of Rights | 2022

- [5] Bank Policy Institute, Navigating Artificial Intelligence in Banking | 2024

- [6] Loeb & Loeb, A Look Ahead: Opportunities and Challenges of AI in the Banking Industry | 2024